Learning Compositional, Structural, and Actionable Visual Representations for 3D Shapes

Kaichun Mo

Ph.D. Student at Stanford University

Apr 11, 2022 (Mon), 10:30 a.m. KST | Zoom

Guest Lecture at CS492(A): Machine Learning for 3D Data

Minhyuk Sung, KAIST, Spring 2022


Image credit: https://cs.stanford.edu/~kaichun

Abstract

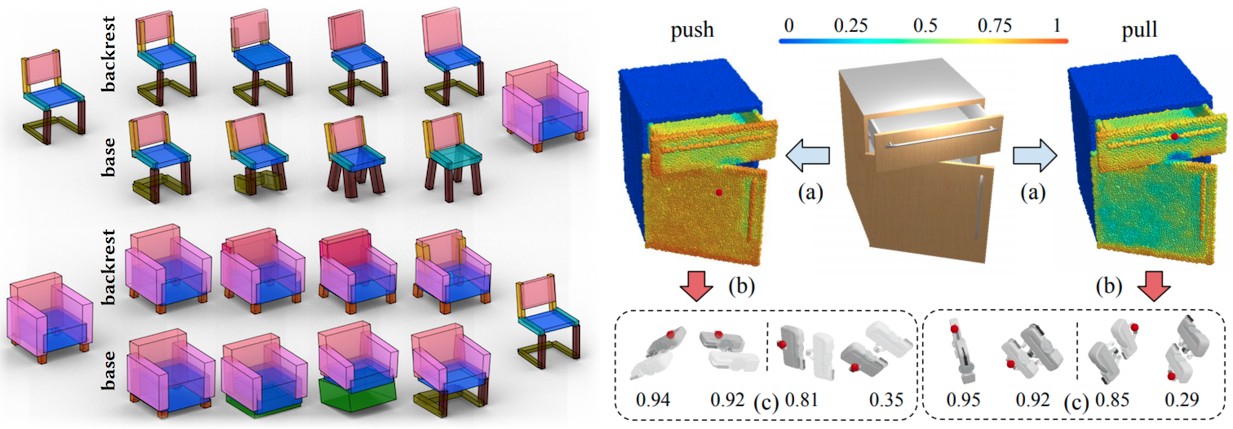

We humans accomplish everyday tasks by perceiving, understanding, and interacting with a wide range of 3D objects with diverse geometry, rich semantics, and complicated structures. One fundamental goal of computational visual perception is to equip intelligent agents with similar capabilities. My Ph.D. research is motivated by one central research question: what are good visual representations of 3D shapes for diverse downstream tasks in vision, graphics, and robotics, and how can we develop general learning frameworks to learn them at scale? In this talk, I will first discuss compositional approaches to 3D perception, in which smaller, simpler, and reusable subcomponents of 3D geometry and functionality, such as the parts of an object, are discovered and leveraged to reduce the modeling complexity of 3D data. Second, I will present some of my work on part-based and structure-aware generative models for 3D shape synthesis and editing. Finally, I will introduce my recent research on learning general actionable visual information for robotic manipulation of 3D shapes, such as visual affordances and trajectory proposals, via large-scale self-supervised learning from simulated interaction.

Bio

Kaichun Mo is a sixth-year (final-year) Ph.D. student in Computer Science at Stanford University, advised by Prof. Leonidas Guibas. Before that, he received his B.S.E. degree from the ACM Honored Class at Shanghai Jiao Tong University. His Ph.D. research focuses on learning visual representations of 3D shapes for applications in 3D vision, graphics, and robotics. He co-authored PointNet, which pioneered 3D deep learning on point cloud data; led the effort to build the PartNet dataset for understanding the compositional part structure of 3D shapes; investigated novel problems and approaches for structure-aware 3D shape recognition and generation; and, more recently, explored learning actionable visual information (e.g., affordances and trajectories) over 3D geometry for robotic manipulation tasks. He has interned at Adobe Research, Autodesk Research (AI Lab), and Facebook AI Research. He has published papers at CVPR, ICCV, ECCV, NeurIPS, ICLR, CoRL, SIGGRAPH Asia, and AAAI. His webpage: https://cs.stanford.edu/~kaichun