ComplementMe: Weakly-Supervised Component Suggestions for 3D Modeling

Minhyuk Sung, Hao Su, Vladimir G. Kim, Siddhartha Chaudhuri, Leonidas Guibas

SIGGRAPH Asia 2017

Abstract

Assembly-based tools provide a powerful modeling paradigm for non-expert shape designers. However, choosing a component from a large shape repository and aligning it to a partial assembly can become a daunting task. In this paper, we describe novel neural network architectures for suggesting complementary components and their placement for an incomplete 3D part assembly. Unlike most existing techniques, our networks are trained on unlabeled data obtained from public online repositories, and do not rely on consistent part segmentations or labels. The absence of labels poses a challenge in indexing the database of parts for retrieval. We address it by jointly training embedding and retrieval networks, where the first indexes parts by mapping them to a low-dimensional feature space, and the second maps partial assemblies to appropriate complements. The combinatorial nature of part arrangements poses another challenge, since the mapping from a partial assembly to its complement is not a function: several complements can be appropriate for the same input. Thus, instead of predicting a single output, we train our network to predict a probability distribution over the space of part embeddings. This allows our method to deal with ambiguities and naturally enables a UI that seamlessly integrates user preferences into the design process. We demonstrate that our method can be used to design complex shapes with minimal or no user input. To evaluate our approach, we develop a novel benchmark for component suggestion systems, demonstrating significant improvement over state-of-the-art techniques.
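To make the idea of predicting a distribution rather than a single embedding concrete, here is a minimal NumPy sketch. It assumes the predicted distribution is a mixture of Gaussians with diagonal covariance (the Fig. 2 caption names a mixture of Gaussians; the diagonal form is an assumption), and it scores a target part embedding by its negative log-likelihood, a common training objective for such networks. The function name and the toy numbers are hypothetical, and the paper's actual loss may differ.

```python
import numpy as np

def gmm_neg_log_likelihood(x, weights, means, stds):
    """Negative log-likelihood of one embedding under a Gaussian mixture.

    x:       (D,)   target part embedding
    weights: (K,)   mixing coefficients, summing to 1
    means:   (K, D) component means
    stds:    (K, D) per-dimension standard deviations (diagonal covariance, assumed)
    """
    var = stds ** 2
    # Log-density of x under each component, summed over dimensions: shape (K,)
    log_comp = -0.5 * ((x - means) ** 2 / var + np.log(2 * np.pi * var)).sum(axis=1)
    log_mix = np.log(weights) + log_comp
    # Log-sum-exp over components, shifted by the max for numerical stability
    m = log_mix.max()
    return -(m + np.log(np.exp(log_mix - m).sum()))

# Toy example: two modes, standing in for two equally plausible complements.
w = np.array([0.5, 0.5])
mu = np.array([[0.0, 0.0], [4.0, 4.0]])
sd = np.ones((2, 2))
nll_at_mode = gmm_neg_log_likelihood(np.array([4.0, 4.0]), w, mu, sd)
nll_between = gmm_neg_log_likelihood(np.array([2.0, 2.0]), w, mu, sd)
```

In this toy setup, an embedding at either mode scores a lower loss than one halfway between them, which is the behavior a single-output regression cannot express.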

arXiv | Paper | Slides (PPTX) | Code

BibTeX

@article{Sung:2017,
  author = {Sung, Minhyuk and Su, Hao and Kim, Vladimir G. and Chaudhuri, Siddhartha and Guibas, Leonidas},
  title = {Complement{Me}: Weakly-Supervised Component Suggestions for 3D Modeling},
  journal = {ACM Transactions on Graphics (Proc. of SIGGRAPH Asia)},
  year = {2017}
}

Data download

We provide the ShapeNet model component and semantic part data used in the paper. You can directly download the point cloud and contact graph data from the following links:

- Components (877M)

  Point cloud and contact graph data of components (generated as described in Sec. 4 of the paper). Refer to the included README file for the details of the data structure.

- Semantic parts (220M)

  Point cloud data of semantic parts. This is a subset of the ShapeNet semantic part dataset created by [Yi et al. 2016]. Refer to the included README file for the details of the data structure.

The mesh data is provided only to people who have already signed up for ShapeNet and agreed to its Terms of Use. To download the mesh data, please fill out an agreement to the ShapeNet Terms of Use using your ShapeNet account email address, and send it to mhsung@cs.stanford.edu.

Please cite our paper and all related papers if you use this dataset in your research.

Overview & Results

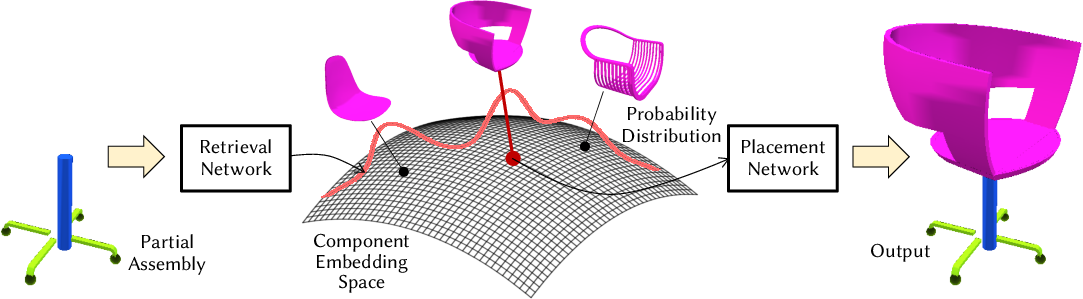

Fig. 2. Overview of the retrieval process at test time. From the given partial assembly, the retrieval network predicts a probability distribution (modeled as a mixture of Gaussians) over the component embedding space. Suggested complementary components are sampled from the predicted distribution, and then the placement network predicts their positions with respect to the query assembly.
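The test-time retrieval step in this caption can be sketched in a few lines of NumPy. This is an illustrative sketch only: it assumes a diagonal-covariance mixture, uses random toy embeddings in place of a trained embedding network, and retrieves by nearest neighbor in the embedding space. The names `sample_gmm` and `nearest_components` are hypothetical, and the placement network is not modeled.

```python
import numpy as np

def sample_gmm(weights, means, stds, n_samples, rng):
    """Draw samples from a mixture of diagonal-covariance Gaussians.

    weights: (K,)   mixing coefficients, summing to 1
    means:   (K, D) component means in the embedding space
    stds:    (K, D) per-dimension standard deviations
    """
    # Pick a mixture component for each sample, then perturb its mean.
    ks = rng.choice(len(weights), size=n_samples, p=weights)
    noise = rng.standard_normal((n_samples, means.shape[1]))
    return means[ks] + stds[ks] * noise

def nearest_components(queries, embeddings):
    """For each query point, return the index of the closest database embedding."""
    d2 = ((queries[:, None, :] - embeddings[None, :, :]) ** 2).sum(axis=-1)
    return d2.argmin(axis=1)

rng = np.random.default_rng(0)
# Toy database of 100 component embeddings in 8 dimensions (stand-in data).
embeddings = rng.standard_normal((100, 8))
# Pretend the retrieval network predicted two tight modes around components 3 and 42.
weights = np.array([0.7, 0.3])
means = embeddings[[3, 42]]
stds = np.full((2, 8), 1e-3)

samples = sample_gmm(weights, means, stds, n_samples=5, rng=rng)
suggested = nearest_components(samples, embeddings)
```

Because sampling is stochastic, repeated calls can surface different modes of the distribution, which is what allows several distinct suggestions for the same partial assembly.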

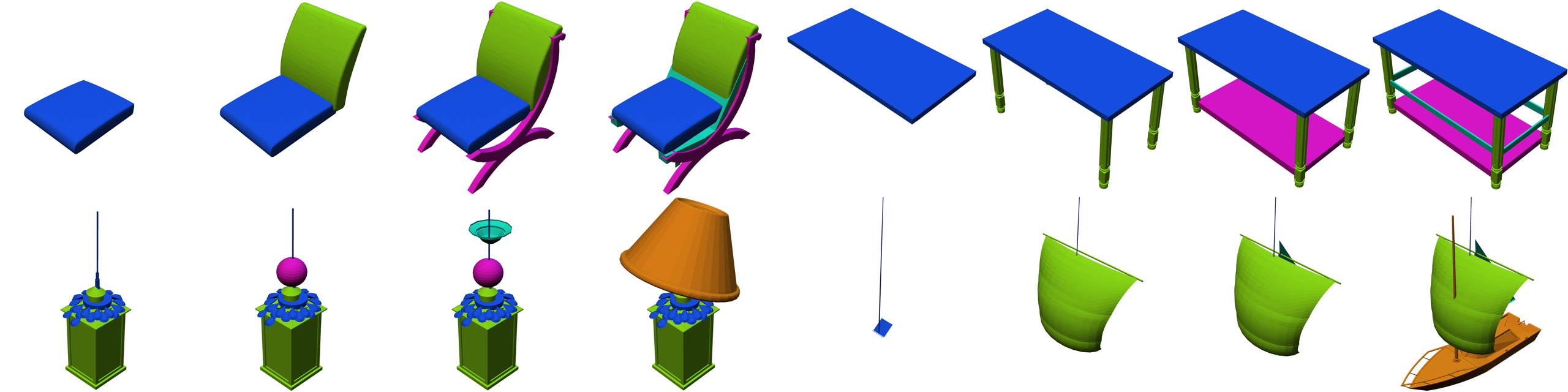

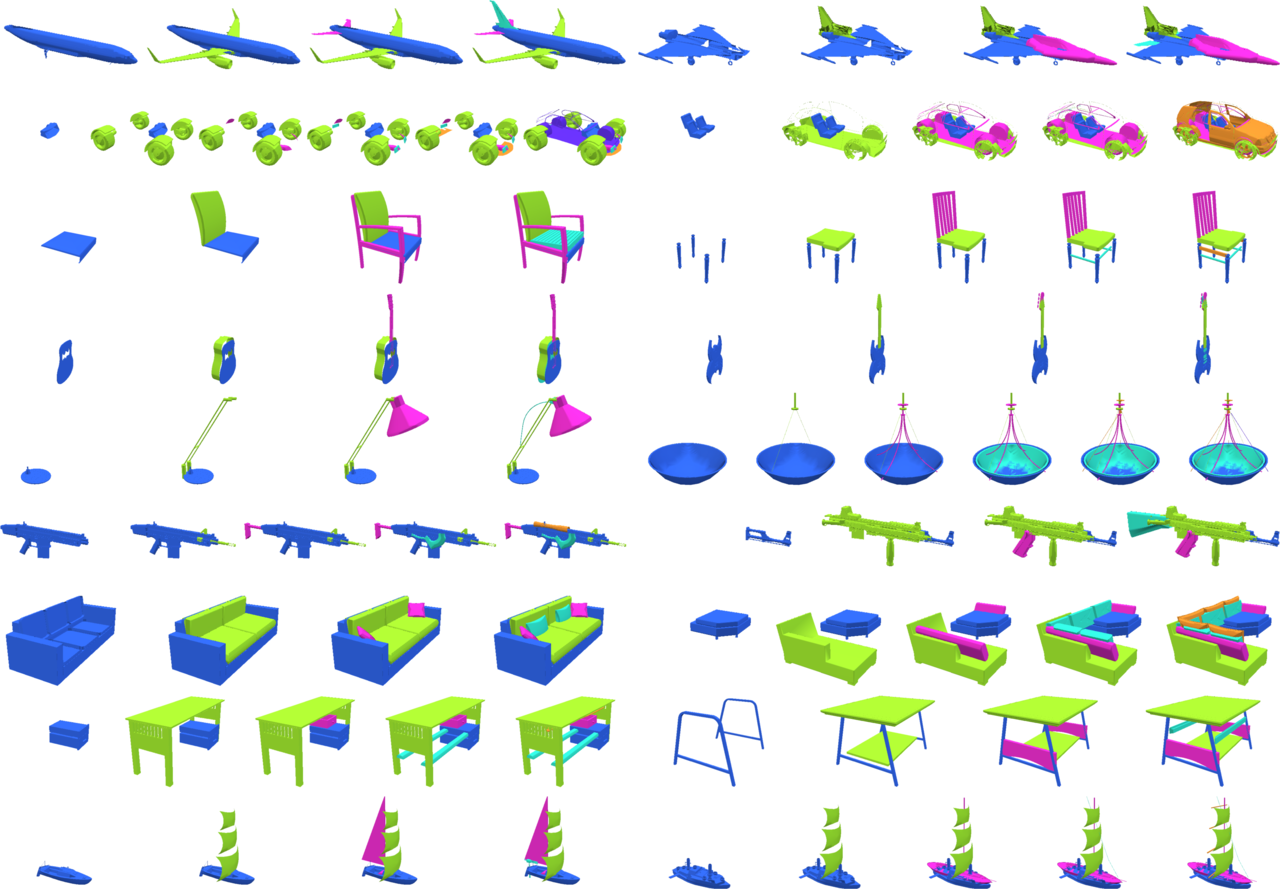

Fig. 7. Automatic iterative assembly results from a single component. Small components not typically labeled with semantics in the shape database (e.g., slats between chair/table legs, pillows on sofas, cords in lamps/watercraft) are appropriately retrieved and placed.

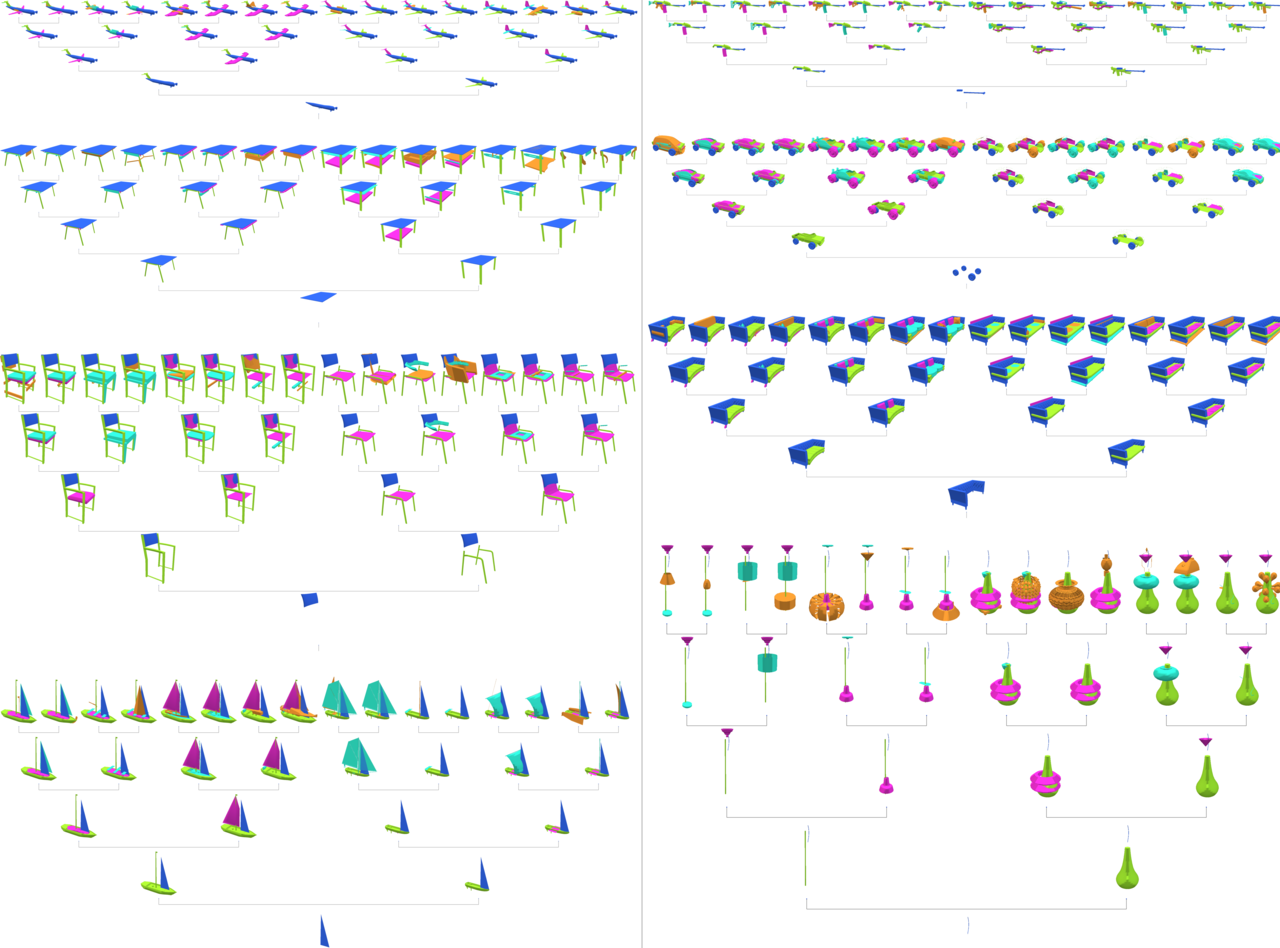

Fig. 8. Automatic iterative assembly with two different random choices at every step. Starting from the initial component at the bottom, various objects are synthesized by assembling different components.

More Results

Automatic shape synthesis with maximal probability components (more examples of Fig. 7)

| Airplane | Car | Chair | Guitar | Lamp | Rifle | Sofa | Table | Watercraft |

Automatic shape synthesis with two different random choices each time (more examples of Fig. 8)

| Airplane | Car | Chair | Guitar | Lamp | Rifle | Sofa | Table | Watercraft |

Acknowledgements

This project was supported by NSF grants IIS-1528025 and DMS-1521608, MURI award N00014-13-1-0341, a Google Focused Research Award, the Korea Foundation for Advanced Studies, and gifts from Adobe Systems and Autodesk.

[Yi et al. 2016] Li Yi, Vladimir G. Kim, Duygu Ceylan, I-Chao Shen, Mengyan Yan, Hao Su, Cewu Lu, Qixing Huang, Alla Sheffer, and Leonidas Guibas, “A Scalable Active Framework for Region Annotation in 3D Shape Collections”, SIGGRAPH Asia 2016.

[ShapeNet] http://shapenet.cs.stanford.edu/