DeepMetaHandles: Learning Deformation Meta-Handles of 3D Meshes with Biharmonic Coordinates

Minghua Liu, Minhyuk Sung, Radomír Měch, Hao Su

CVPR 2021 (Oral)

Abstract

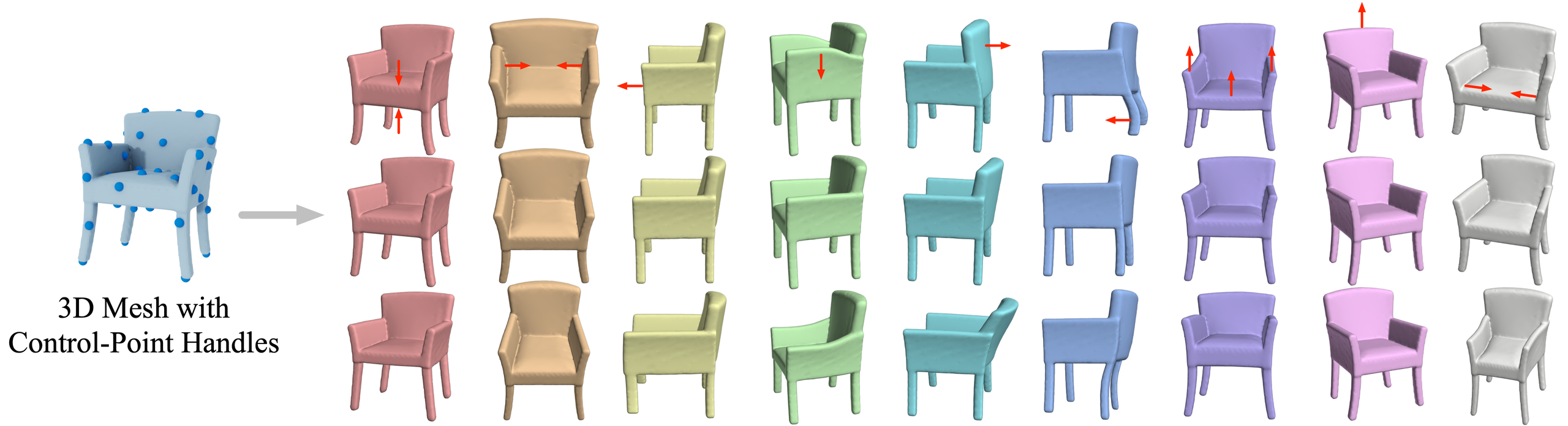

We propose DeepMetaHandles, a 3D conditional generative model based on mesh deformation. Given a collection of 3D meshes of a category and their deformation handles (control points), our method learns a set of meta-handles for each shape, which are represented as combinations of the given handles. The disentangled meta-handles factorize all plausible deformations of the shape, and each of them corresponds to an intuitive deformation. A new deformation can then be generated by sampling the coefficients of the meta-handles within a specific range. We employ biharmonic coordinates as the deformation function, which can smoothly propagate the control points’ translations to the entire mesh. To avoid learning zero deformation as meta-handles, we incorporate a target-fitting module which deforms the input mesh to match a random target. To enhance the plausibility of the deformations, we employ a soft-rasterizer-based discriminator that projects the meshes to a 2D space. Our experiments demonstrate the superiority of the generated deformations as well as the interpretability and consistency of the learned meta-handles.
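The deformation model described above is linear once the meta-handles and weights are known, so it can be illustrated in a few lines. The sketch below is a minimal NumPy illustration under stated assumptions, not the authors' implementation: each meta-handle is assumed to be stored as a translation for every control point (shape `(c, 3)`), and the precomputed biharmonic coordinates are assumed to form an `(n, c)` weight matrix that reproduces the rest shape, so adding `weights @ offsets` to the vertices equals re-evaluating the coordinates at the moved control points. The functions `deform` and `sample_coeffs`, the toy weights, and the uniform coefficient ranges are all hypothetical.

```python
import numpy as np

def deform(vertices, weights, meta_handles, coeffs):
    """Deform a mesh with a linear combination of meta-handles (sketch).

    vertices:     (n, 3) rest-pose vertex positions
    weights:      (n, c) per-vertex weights of the c control points
                  (hypothetical stand-in for precomputed biharmonic coordinates)
    meta_handles: (m, c, 3) control-point translations of each meta-handle
    coeffs:       (m,) one scalar coefficient per meta-handle
    """
    # Combine the meta-handles into one translation per control point: (c, 3)
    offsets = np.tensordot(coeffs, meta_handles, axes=1)
    # Propagate the control-point translations to every vertex: (n, 3)
    return vertices + weights @ offsets

def sample_coeffs(lower, upper, rng):
    """Draw one coefficient per meta-handle uniformly from [lower, upper)."""
    return rng.uniform(lower, upper)

# Toy "mesh": four vertices on a line, control points at x=0 and x=3.
vertices = np.array([[0., 0, 0], [1, 0, 0], [2, 0, 0], [3, 0, 0]])
# Hypothetical weights: rows sum to 1 and reproduce the rest shape.
weights = np.array([[1, 0], [2/3, 1/3], [1/3, 2/3], [0, 1]])
meta_handles = np.array([
    [[0, 0, 0], [1, 0, 0]],  # meta-handle 0: stretch along x
    [[0, 0, 0], [0, 1, 0]],  # meta-handle 1: lift the right end
], dtype=float)

# The last vertex moves to (3.5, 2.0, 0.0); the first stays at the origin.
deformed = deform(vertices, weights, meta_handles, np.array([0.5, 2.0]))

# Sample a new deformation with coefficients in assumed ranges [-1, 1).
rng = np.random.default_rng(0)
coeffs = sample_coeffs(np.array([-1.0, -1.0]), np.array([1.0, 1.0]), rng)
new_shape = deform(vertices, weights, meta_handles, coeffs)
```

Because every step is linear, setting all coefficients to zero returns the rest shape. This is why a degenerate solution with zero-translation meta-handles is possible, which the abstract says the target-fitting module is meant to prevent.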


arXiv | Code

BibTeX

@inproceedings{DeepMetaHandles:2021,
  author = {Liu, Minghua and Sung, Minhyuk and M\v{e}ch, Radom\'{i}r and Su, Hao},
  title = {DeepMetaHandles: Learning Deformation Meta-Handles of 3D Meshes with Biharmonic Coordinates},
  booktitle = {CVPR},
  year = {2021}
}

Interactive Shape Editing Demo

The demo lets users edit several shapes jointly by moving the meta-handle sliders, and change the viewpoint by dragging on a shape.

Meta-Handle Results

Each row shows, for several different shapes, the deformation produced by the meta-handle with the same index.


Acknowledgements

This work is supported in part by gifts from Adobe, Kwai, Qualcomm, and Vivo.